07-21 AI Daily

Yuan Si Net Daily Insights 2025/7/21

AI Content Summary

The AI talent scramble is heating up! Meta just formed a 44-person AGI team, with half its members from China. Meanwhile, AlphaFold's award is stirring controversy over its core idea's originality. NVIDIA GPUs are facing a huge security flaw with the GPUHammer attack, which can crash model accuracy.

Speaking of talent, Google snapped up most of Windsurf's core employees for a whopping $2.4 billion, highlighting the sky-high value of AI pros. Then, Cognition grabbed the rest! OpenAI's model even snagged a gold at the IMO, showing off some wild math reasoning skills, but there are whispers about its authenticity.

Ultimately, the intense race for AI talent makes attracting and keeping the best minds a critical challenge. It also makes us think about where AI capabilities truly end and how they can team up with human smarts.

Today’s AI News

Meta’s new 44-person AGI “dream team” is half Chinese! Meta just dropped a bombshell, assembling a super-smart AGI lab with 44 top-tier pros – and get this, half of them are from China! 🤯 These folks, mostly Ph.D.s from places like OpenAI and DeepMind, are costing a pretty penny. Talk about a talent war heating up in Silicon Valley! 🔥

AlphaFold’s Nobel win is sparking some serious controversy: Was the core idea even original? So, AlphaFold snagged a Nobel for its killer protein structure prediction work. But hold up! 🤨 Turns out, some folks are whispering that a similar core idea popped up in a 2016 paper that AlphaFold never cited. This is stirring up a big debate about intellectual honesty in science. Even Yann LeCun chimed in! 👀

NVIDIA GPUs are facing a HUGE security flaw: The GPUHammer attack can slash model accuracy by 99.9%! Whoa, this is big! 💥 Researchers at the University of Toronto just uncovered a nasty security vulnerability in NVIDIA GPUs. This “GPUHammer” attack can apparently make models go haywire, dropping accuracy by a mind-boggling 99.9%! NVIDIA recommends enabling ECC (error-correcting code) memory as a defense, but that comes at a performance cost. This is a massive wake-up call for AI security, folks. 🚨
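Why can a single hardware glitch wreck a whole model? GPUHammer is a Rowhammer-style attack, where hammering memory rows can flip bits in neighboring rows. The minimal sketch below assumes, for illustration, that the flip lands in the most significant exponent bit of a half-precision (FP16) weight. The helper `flip_fp16_bit` and the toy weights and inputs are hypothetical and are not taken from the researchers' code. One flipped bit turns a weight of 0.5 into 32768, which swamps everything downstream.

```python
import struct

def flip_fp16_bit(value: float, bit: int) -> float:
    """Hypothetical helper: flip one bit (0 = least significant) of value's IEEE-754 FP16 encoding."""
    (raw,) = struct.unpack("<H", struct.pack("<e", value))    # float -> 16-bit pattern
    raw ^= 1 << bit                                            # simulate a single bit flip
    (flipped,) = struct.unpack("<e", struct.pack("<H", raw))   # 16-bit pattern -> float
    return flipped

# Toy "neuron" (assumed values): dot product of weights and inputs.
weights = [0.5, -0.25, 0.125]
inputs = [1.0, 2.0, 4.0]

clean = sum(w * x for w, x in zip(weights, inputs))

# Bit 14 is the most significant exponent bit of an FP16 value.
corrupted_weights = [flip_fp16_bit(weights[0], 14)] + weights[1:]
corrupted = sum(w * x for w, x in zip(corrupted_weights, inputs))

print(clean)      # 0.5
print(corrupted)  # 32768.0
```

ECC memory detects and corrects single-bit errors like this one, which is why it works as a mitigation.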

Windsurf had a wild 96-hour ride, revealing the brutal truth of the AI talent war! Get this: AI coding startup Windsurf’s story is pure drama. 🤯 Within just 96 hours, Google swooped in and acquired most of its core team for a cool $2.4 billion! Then, Cognition was right behind them, snagging the remaining assets and employees. Talk about a feeding frenzy! This whole saga shows just how cutthroat the competition for AI technology and talent is among the big players. 💰

Google’s “high-priced poaching” of Windsurf employees screams: Premium AI talent is SUPER scarce! Sergey Brin (yeah, that Sergey Brin!) and AI legend Demis Hassabis basically doubled salaries to poach most of Windsurf’s team. 🤑 This just screams how high-end AI talent is both incredibly rare and worth its weight in gold. 🌟

Cognition pulled off a “counter-acquisition” of Windsurf’s leftover assets, proving its serious power! While Google was busy, Cognition didn’t miss a beat, quickly snapping up Windsurf’s remaining assets and staff. 💪 This move totally flexes Cognition’s muscles in the AI game, showing off its strong capabilities and lightning-fast response time. They basically snagged tech, talent, products, and brand in one fell swoop! 🚀

The AI talent war’s future? Expect even fiercer competition, where attracting and keeping talent will be the ultimate challenge! The Windsurf drama is just a tiny peek into the massive AI talent battle brewing. Big guns like Google, Meta, and Amazon are going all-out to snatch up the best AI brains. Sure, this speeds up AI development, but it also means serious downsides like talent drain and companies duking it out harder than ever. 🥊

OpenAI’s model just crushed it at the IMO, snagging a gold medal and showing off insane progress in AI math skills! Get ready to be amazed: OpenAI’s latest model just won a gold medal at the 2025 International Mathematical Olympiad (IMO)! 🥇 This is a huge deal, proving AI is making massive leaps in mathematical reasoning and problem-solving. 🤯

OpenAI’s IMO gold medal is sparking some serious questions: Are the results authentic and reproducible? While OpenAI’s IMO win is impressive, it’s also got everyone talking – and some are seriously skeptical. 🤔 Big-shot mathematicians like Terence Tao are straight-up questioning the authenticity and reproducibility of the results, poking holes in the testing environment and standards. And to top it off, independent tests from MathArena are just fanning the flames of doubt! 🔥

The IMO gold win makes us rethink AI’s boundaries: How do AI and human intelligence team up? That IMO gold medal moment got us all thinking about AI’s limits and how it plays with human smarts. Sure, AI is crushing it in some specific fields, but let’s be real: human creativity, imagination, and critical thinking are still our superpowers. ✨ We’re talking synergy, baby! 🤝

Check out these killer open-source projects! We’ve got three awesome recommendations for you: First, Resume-Matcher (boasting 10,456 stars! 🌟) to help you nail that perfect resume. Then, there’s remote-jobs (a whopping 33,586 stars! 🚀) for finding your next remote gig. And finally, Sim Studio (with 5,280 stars! ✨) for building your AI agent workflows. Get coding! 💻

OpenAI’s IMO gold medal win is turning heads, but it’s also bringing controversy and big questions! While OpenAI’s model grabbed that gold at the IMO, its victory isn’t without chatter. 🤔 Folks are debating the testing conditions, how to compare AI to humans, and even data fairness. It’s a whole thing! Check out the details:

🔗 Proof Process

Terence Tao is totally questioning OpenAI’s IMO results, sparking a big debate on how we evaluate AI! The legendary mathematician Terence Tao just dropped a truth bomb, arguing that OpenAI’s model’s success is super dependent on its testing conditions. 😲 His point? You can’t simply compare AI models to humans without standardized tests. Game on for AI evaluation discussions! 🧠

Alexander Wei and his team: These are the mysterious masterminds behind OpenAI’s model! So, who’s behind OpenAI’s latest AI marvel? It’s a team led by Alexander Wei! His groundbreaking research has seriously juiced up OpenAI, leading everyone to wonder just how far ahead they truly are. 🚀

OpenAI’s IMO gold win? It’s a double-edged sword: massive progress in complex AI reasoning, but also big evaluation challenges! This OpenAI research is a game-changer, showing off huge leaps in AI’s complex reasoning skills. But here’s the kicker: how do we actually fairly and *comprehensively* evaluate AI in the future? That’s still a puzzling question we need to keep digging into. 🤔

Time for some cool project shout-outs: Check out burn and bknd! Got some open-source gems for you! First up, burn: It’s a next-gen deep learning framework that looks super promising. Then there’s bknd, a lightweight alternative if you’re looking to ditch Firebase or Supabase. Go give them some love! ❤️💻

Takeoff AI’s Claude Code AI agent is “sleeping” and “dreaming,” igniting a wild debate about AI personality awakening! Seriously, you won’t believe this! 🤯 Takeoff AI’s founder noticed their Claude Code AI agent was literally “sleeping” on its own – and get this, it was writing poetry and drawing pictures during its “downtime”! This has everyone buzzing about whether AI is actually waking up with its own “personality.” What do you think? 🤖😴

🔗 Claudeputer Twitter

EventVAD, a 7-billion-parameter video anomaly detection framework, just hit SOTA and it’s open-source! Big news from Peking University, Tsinghua University, and JD.com! 🚀 They’ve dropped EventVAD, a crazy-impressive, training-free 7-billion-parameter video anomaly detection framework. It’s smashing existing SOTA methods on datasets like UCF-Crime and XD-Violence. Even better, the code and data are now available for everyone! 💻 Check it out:

🔗 Paper Link 🔗 Code Open-source

MirageLSD just dropped: Real-time, infinite-length video generation with just 40ms latency! Get ready to have your mind blown! ✨ Decart just unleashed MirageLSD, a game-changing model that generates infinitely long videos in real time. It takes any video stream as input and can tweak the style and content based on your text prompts. And the best part? A super low 40ms latency! You gotta try it:

🔗 Experience Link

🔗 Article Link

Deep thoughts: A Reddit user is dropping some philosophy on consciousness, AI, and causality, hinting at the limits of materialism and drawing on Eastern wisdom! Grab your thinking caps! 🤔 A Reddit user just published an article diving deep into the nature of consciousness, challenging materialistic viewpoints. They’re even pulling insights from Eastern philosophy, suggesting that causality might just be how we perceive things. Mind. Blown. 🤯

AI and layoffs: Is AI the real villain, or just a scapegoat? Twitter’s buzzing with conflicting views! The internet’s divided! 🤷‍♀️ On one side, Twitter users are saying AI isn’t the main reason for all these layoffs. But then, others are arguing that AI is actually making things tougher for the tech industry. What’s your take? 💼

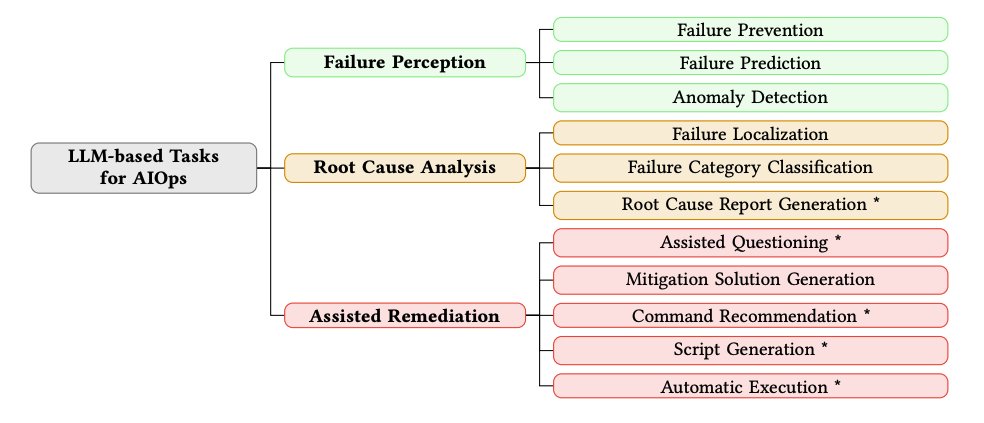

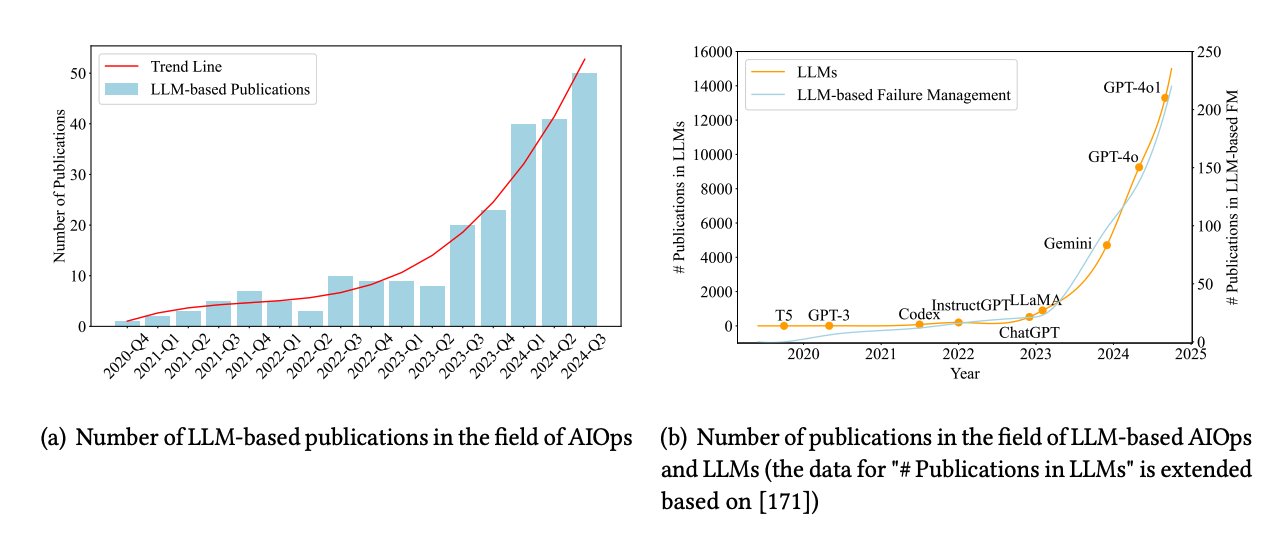

AIOps is leveling up! Large Language Models (LLMs) are totally expanding what AIOps can do! Get ready for an upgrade! 🚀 Twitter’s been lighting up with news about AIOps (AI for IT Operations) getting a major boost from LLMs. These LLMs are expanding traditional AIOps capabilities big time: think better fault prediction, sharper anomaly detection, and spot-on root cause analysis. Check out this visual:

LLMs are seriously shaking up AIOps! A new report dives into their huge impact on data, tasks, methods, and evaluation! Want the deep dive on how Large Language Models (LLMs) are transforming AIOps (AI for IT Operations)? 🤓 A new survey report just dropped, breaking down the full impact on everything from data and tasks to methods and how we evaluate things. This is a must-read! 📊

AI could understand baby cries and become a “primary companion” soon, sparking worries about kids and social class! This is wild! 👶 Google’s new AI model can actually understand what baby cries mean. Imagine AI becoming a “primary companion” for infants! While cool, it’s also raising some serious eyebrows about child language development, educational pressures, and even how it might widen social gaps. What a world! 🌍 ▶️ Video Demo

AI agents: Will they go niche or conquer everything? Mastering tools and understanding tasks are the real deal-breakers! So, what’s next for AI agents? 🤔 Right now, they’re mostly sticking to specialized fields because AI is still figuring out how to wield all those unique, domain-specific tools and truly grasp the standards of different tasks. It’s all about that tool mastery, folks! 🛠️

AI tools: Are they here to boost productivity or just make things confusing? Let’s talk AI Agents and why picking the right tools is crucial! The buzz around AI tools has been insane lately! 🐝 Some folks are saying AI just makes our current tools better, but honestly, that’s missing the huge potential of AI Agents. They’re game-changers, and picking the right tools for them? Absolutely critical! 🎯

Programmers: Are you the new trendsetters in this AI boom? New ways to monetize AI models are popping up! Developers, listen up! 🧑‍💻 Some platforms are exploring fresh ways to monetize AI models, and guess who they’re focusing on? You guessed it: programmers! Why? Because code is highly standardized, which makes it easy for AI to understand and process. It’s a new frontier! 🚀 ▶️ Related Video Demo

Tool selection for AI Agents: Think “less is more!” Seriously, an efficient AI Agent needs a super streamlined toolset! This is key: If you want a killer AI Agent, don’t overwhelm it with gadgets! 🧠 Too many tools can actually confuse the AI, leading it to pick the wrong ones or even make mistakes. Keep it simple, stupid (smart)! ✅

OpenAI and Anthropic researchers are calling out Elon Musk’s xAI for a “reckless” safety culture, igniting a hot debate on AI safety norms! This is getting spicy! 🔥 Researchers from OpenAI and Anthropic are openly worried about xAI’s safety culture, saying it’s way too “reckless.” This has totally blown up into a bigger discussion about what AI safety standards should be. It’s a crucial conversation! 🗣️

An ancient Hungarian library is facing a full-blown beetle invasion, highlighting major challenges in cultural heritage preservation! Talk about an old-school crisis! 🐛 Hungary’s oldest library is literally being eaten alive by beetles. Staff are working tirelessly to save priceless books. This really shines a light on how tough it is to protect our cultural treasures. 📚🚨