07-23-Daily AI Daily

YuanSi Network Insight Daily 2025/7/23

YuanSi Daily

AI Content Summary

Netflix is leveraging AI to boost film and TV production efficiency, slashing costs and speeding up special effects tenfold. iFlytek just launched the X5, the world's first office notebook powered by a local large model, boasting powerful offline AI features and a strong focus on privacy. A study revealed that 72% of US teens have tried AI chatbot companions, reflecting their need for connection and exploration of new tech.

AI is making huge waves in physics, shifting from a mere efficiency tool to a true cognitive partner, revolutionizing experiment design and data analysis. An Oxford team developed the Graphinity model to predict antibody-antigen binding strength with high accuracy, though data scarcity remains a challenge.

Reinforcement learning expert Sergey Levine emphasizes that real-world data is crucial for training robot models, while alternative data sources are just supplementary. An advanced version of DeepMind's Gemini Deep Think model achieved a gold-medal-level score at the IMO competition, and DeepMind's cautious release approach highlights the critical importance of tech ethics.

Today’s AI News

Netflix is totally slaying the game by leveraging AI to supercharge its film and TV production! 🚀 On the Argentinian hit “The Eternaut” (“El Eternauta”), for example, using Generative AI (GenAI) slashed costs and made visual effects work ten times faster. This means advanced effects like “de-aging” tech, once exclusive to big-budget productions, are now a breeze. Netflix isn’t stopping there; they’re diving deep into AI for personalized recommendations, search, and ads to make your viewing experience even better.

But, full disclosure, this AI boom is sparking some serious concerns about its impact on industry pros.

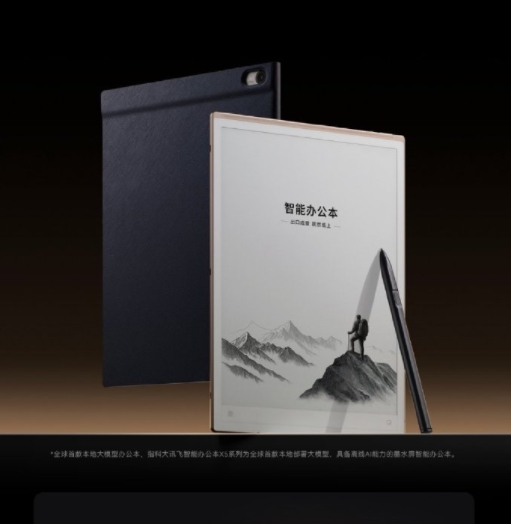

iFlytek just dropped a bombshell, launching the X5, the world’s first smart office notebook powered by a local large model! 🤯 Priced from 4999 yuan, its killer feature is its offline AI capabilities, letting you crush tasks like offline noise reduction, voice transcription, multi-speaker transcription, Chinese-English translation, and meeting minute generation, even when you’re off the grid. With an 8-core CPU and 9T NPU computing power, it’s over 50% faster. The meeting recording function boasts 360-degree omnidirectional pickup, a 98% accuracy rate for Mandarin, and supports various dialects and foreign languages. Plus, privacy is a big deal for them, so it comes with a physical offline toggle and a secure note vault. Pretty sweet! 🔒

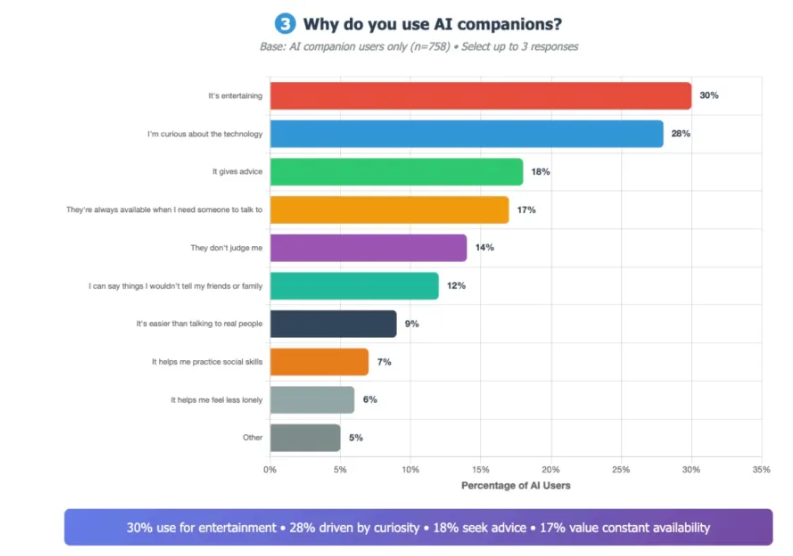

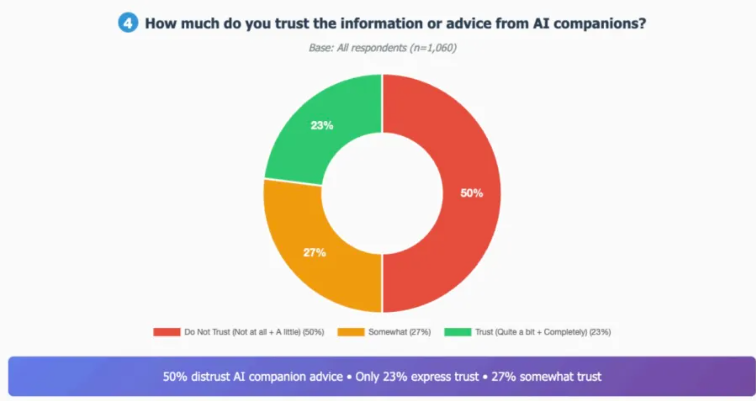

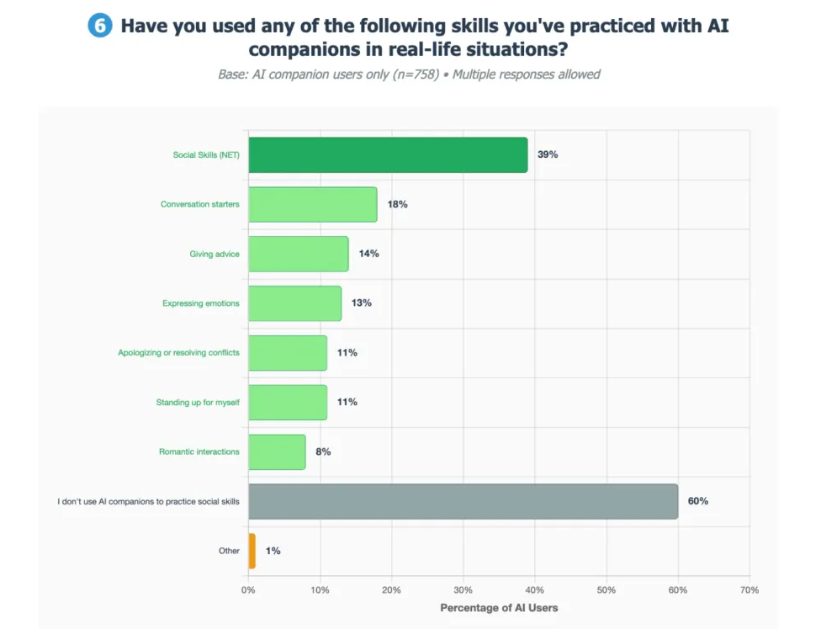

Hold up! A whopping 72% of US teens have actually tried AI chatbot companions like Character.AI and Replika! 😲 Why? Mostly for kicks, curiosity, getting advice, and just because AI is always there, no questions asked. A third of these teens (33%) are even using AI for social interaction, with some finding chats with AI more satisfying than with their actual friends! 🤯 This clearly shows teens are craving connections and are super keen on new tech. But hey, we gotta keep an eye on how this impacts their mental health and make sure they can spot fake info from a mile away. Stay woke! 👀

Okay, job hunters, listen up! Resume-Matcher (🔗 Project Repository) is your new secret weapon! 🤩 This bad boy helps you level up your resume game by analyzing it, dishing out improvement tips, and recommending killer keywords. It’s already racked up 13,958 stars – pretty solid!

Looking to get your finances in order? Check out Maybe (🔗 Project Repository)! 💸 This super user-friendly personal finance app is making waves, boasting a whopping 50,444 stars!

Need an AI buddy that plays nice everywhere? NextChat (🔗 Project Repository) is your go-to! 🚀 This lightweight, cross-platform AI assistant has already snagged a massive 84,831 stars.

Dreaming of working from anywhere? The remote-jobs (🔗 Project Repository) repo is a goldmine! 🌟 It’s packed with remote job openings at tech companies and has already earned over 35,000 stars.

Devs, if you’re building with TypeScript, you gotta check out better-auth (🔗 Project Repository)! 💪 This robust TypeScript authentication framework has already garnered over 17,000 stars.

Got network issues? trippy (🔗 Project Repository) is here to save the day! 🌐 This handy network diagnostics tool has snagged over 5,000 stars.

For all you OSINT pros out there, blackbird (🔗 Project Repository) is a must-have! 🕵️‍♀️ This powerful OSINT tool has already amassed 4,001 stars.

Need to convert files? ConvertX (🔗 Project Repository) has got your back! ✨ This self-hosted online file converter supports over 1,000 formats and boasts 5,285 stars.

Wanna get your CSS skills on point? Check out CSS Exercises (🔗 Project Repository)! 🧑‍💻 This project is designed for learning CSS and has already gathered 2,073 stars.

Hold onto your lab coats, folks! AI is totally reshaping physics experiments, moving from just a handy tool to a full-blown “cognitive partner” for exploring the unknown! 🤯 In recent years, AI has been a game-changer for experiment design and data analysis in physics. For example, AI is being used to fine-tune the sensitivity of LIGO gravitational wave detectors, simplify quantum “entanglement swapping” experiments, and crunch experimental data to hunt for dark matter and Lorentz symmetry violations (🔗 Related Paper, 🔗 Related Paper, 🔗 Related Paper, 🔗 Related Paper). With its unique, sometimes “weird” solutions, AI is seriously pushing scientists to rethink the boundaries of their fields and dive into deeper scientific mysteries. How cool is that? ✨

Get ready for some next-level science! An Oxford University team has developed Graphinity, a groundbreaking model designed to predict how mutations change antibody-antigen binding affinity (ΔΔG)! 🧬 By building a massive dataset of nearly a million data points, they’ve achieved super high-accuracy predictions (Pearson correlation coefficient r > 0.85). In tests with 36,391 experimental data points, it hit an impressive ROC AUC of 0.90 and an average precision (AP) of 0.82. Their research highlights that dataset diversity matters far more than mutation location alone. But even with nearly a million data points in hand, they’re still up against the classic challenge of data scarcity. Wild!
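Curious what those numbers actually measure? Here’s a minimal pure-Python sketch of the standard definitions: Pearson r scores how closely predicted values (like ΔΔG) track reference values, while ROC AUC and average precision score how well a model ranks positives above negatives in a binary task. The toy labels and scores are made up, and framing the task as binder vs. non-binder is an assumption for illustration; this is not the Oxford team’s evaluation code.

```python
import math


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation: covariance divided by the product of standard deviations."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sd_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    sd_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (sd_x * sd_y)


def roc_auc(labels: list[int], scores: list[float]) -> float:
    """Chance that a random positive outscores a random negative (ties count as half)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


def average_precision(labels: list[int], scores: list[float]) -> float:
    """Mean of the precision values at the rank of each true positive (no-ties case)."""
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits, total = 0, 0.0
    for rank, (_, y) in enumerate(ranked, start=1):
        if y == 1:
            hits += 1
            total += hits / rank
    return total / hits


# Toy numbers for illustration only (not Graphinity data)
labels = [1, 0, 1, 1, 0]            # 1 = binder, 0 = non-binder
scores = [0.9, 0.8, 0.7, 0.3, 0.2]  # model confidence that each variant binds
print(round(pearson_r([1, 2, 3], [2, 4, 6]), 3),
      round(roc_auc(labels, scores), 3),
      round(average_precision(labels, scores), 3))
# → 1.0 0.667 0.806
```

In practice you’d likely reach for library versions such as scikit-learn’s `roc_auc_score` and `average_precision_score`; library implementations may treat tied scores differently from this no-ties sketch.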

Listen up, reinforcement learning guru Sergey Levine is dropping some truth bombs: training robot models needs a boatload of real-world interaction data! 🤖 He points out that simulated data, human videos, and even data from handheld gripper devices have their limits. Why? Because they often pre-program task completion strategies, which totally cramps a model’s generalization ability. Levine is super clear: real-world data is non-negotiable, and alternative data is just a nice-to-have supplement. Got it?

Mic drop! An advanced version of DeepMind’s Gemini Deep Think just hit gold-medal level at the IMO competition! 🥇 It fully solved five of the six problems, working end to end in natural language instead of relying on formal languages like Lean. Mind-blowing! DeepMind’s cautious approach to announcing this gem really stands out, especially compared to OpenAI’s “release it early” vibe. It totally screams how crucial tech ethics are in this wild AI world. Check out the 🔗 OpenAI IMO Proofs!

Get ready for a huge leap in robot tech! Researchers from Hong Kong Polytechnic University and others are rocking the world with the Behavior Foundation Model (BFM) for humanoid robot Whole-Body Control (WBC)! 🤖 This BFM lets robots pick up general motor skills through massive pre-training, so they can quickly adapt to all sorts of new tasks. So cool!

Looking ahead, BFM could totally team up with Large Language Models (LLM) and large-scale visual models (check out the 🔗 Project Repository). But, full transparency, this tech still has some hurdles, like the Sim2Real gap, data scarcity, and the big one: differences in robot morphology.

Heads up, businesses! 01.AI (Zero-One Wànwù) has just rolled out its enterprise-grade AI Agent, aiming to create “super employees”! 🚀 Featured in the 2.0 version of their Wanzhi Enterprise Large Model One-Stop Platform, this AI Agent is designed to tackle all sorts of complex tasks for companies, like coding, research, and system access, plus it supports private deployment. Sweet!

It’s already making waves in various sectors, including investment attraction, finance, sales, and gaming. Talk about versatile!

So, what’s the deal with the credibility of AI Journal? 🤔 Someone on Reddit actually sparked a debate about it, and the consensus is clear: always cross-reference your info and don’t just rely on one source. Smart move! ✅

You won’t believe this! A Reddit user accidentally turned their Large Language Model (LLM) into a “future-seeing angel”! 🤯 They shared their wild experience, which has everyone buzzing about the true limits of LLM capabilities. Talk about next-level AI!

Heads up, business pros! Unicorn companies in a bull market often get caught up in fierce price wars, all chasing that juicy Annual Recurring Revenue (ARR) growth. 💸 But here’s the kicker: this often leads to some serious losses. The takeaway? A sustainable, profitable business model totally beats blindly chasing rapid growth. It’s about playing the long game! 🐢

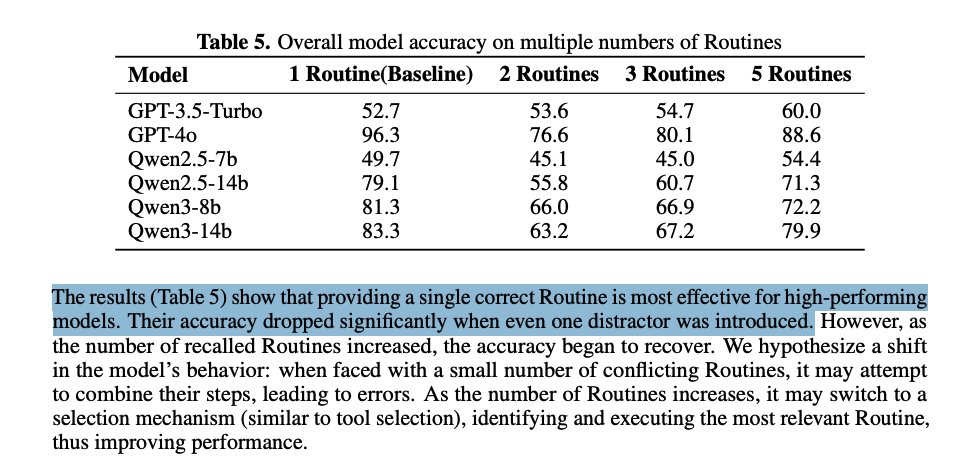

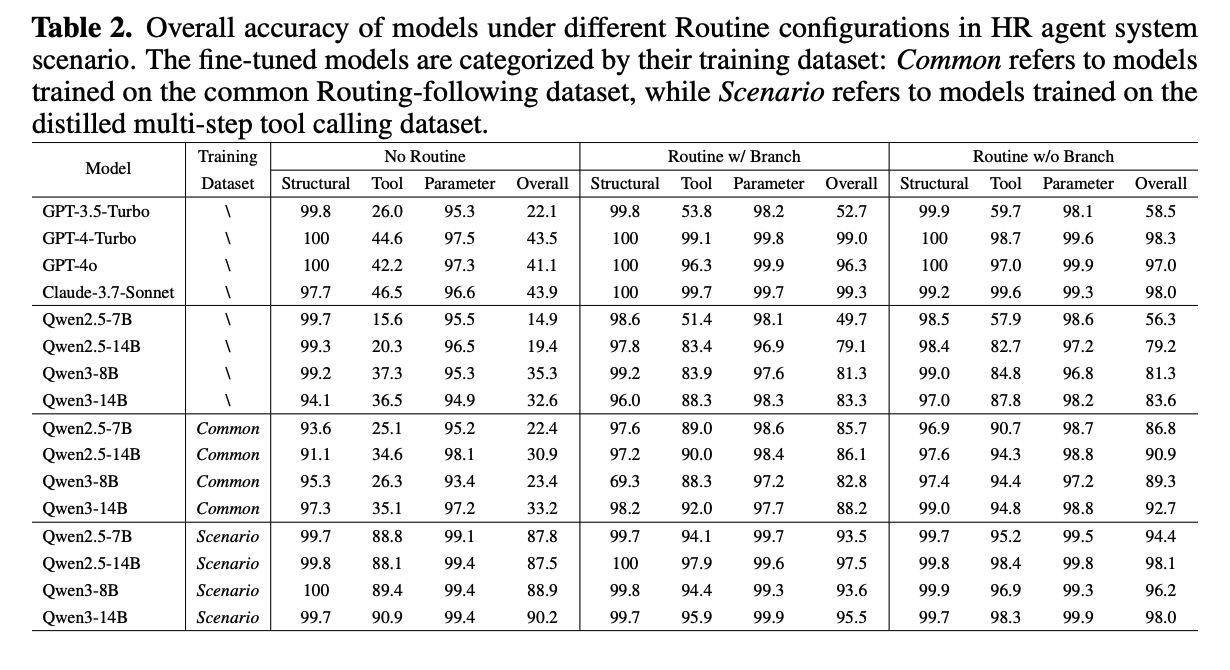

Dive into some tech research! A new paper explores why accuracy dips in memory-based systems when multiple routines get called. 🤔 Interestingly, it found that some smaller models actually performed better after exposure to more routines, all thanks to repetitive sub-steps helping with execution. This totally screams how vital high-precision retrieval is. Check it out!

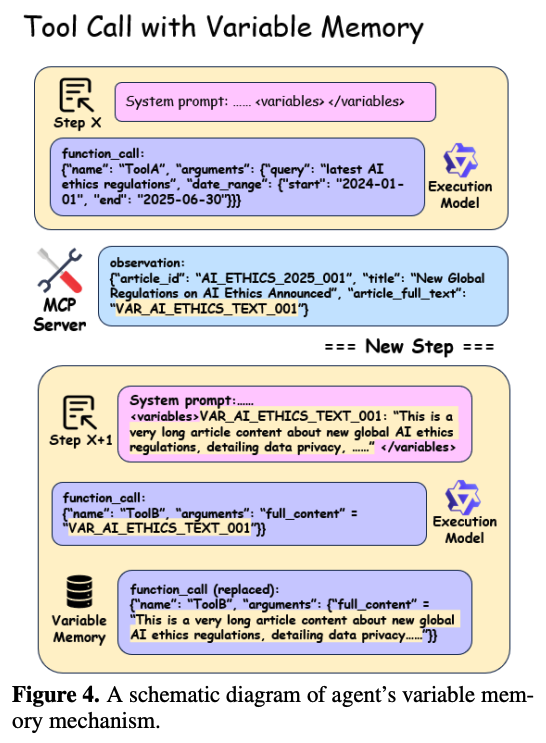

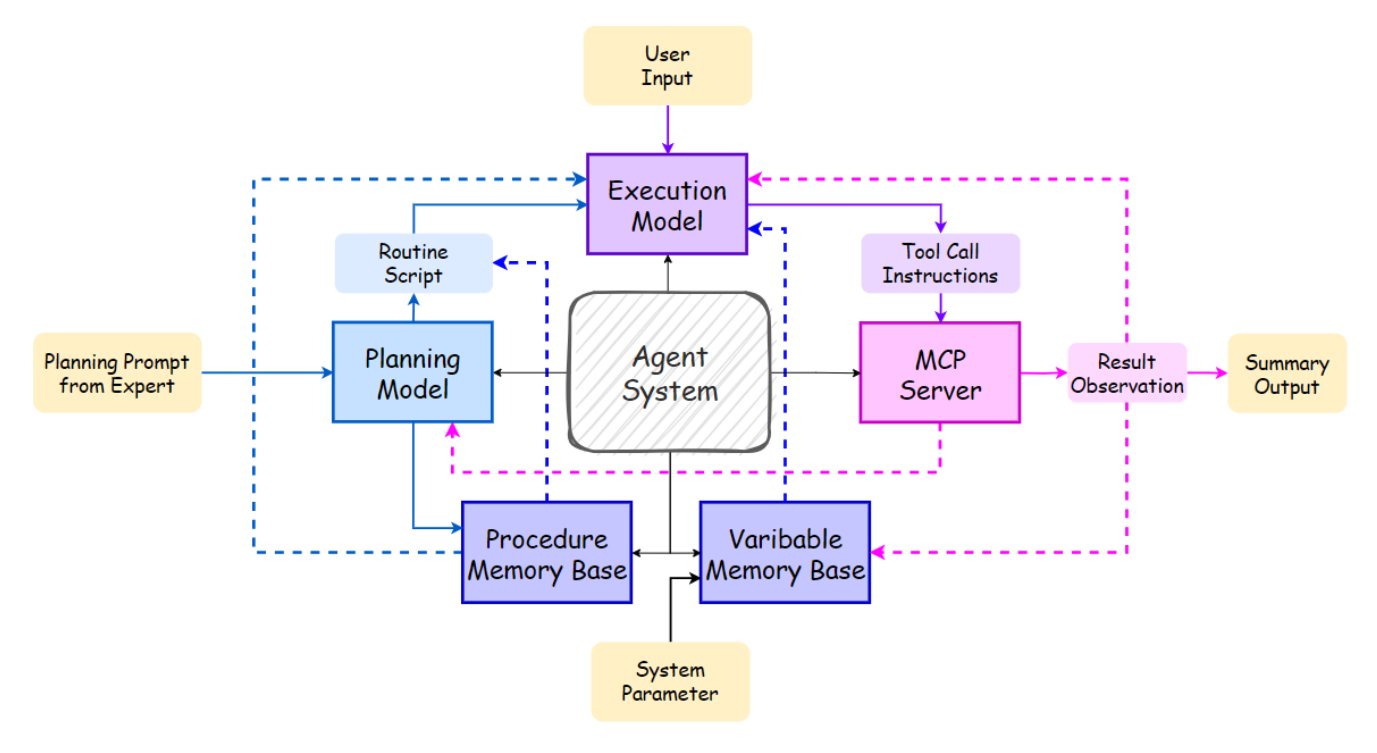

Big news for devs! Tool calling just got a sweet upgrade, boosting multi-step execution efficiency! 🚀 How? By using temporary variables to stash long parameter values, which slashes token overhead and context length pressure. It’s a game-changer for smoother operations!

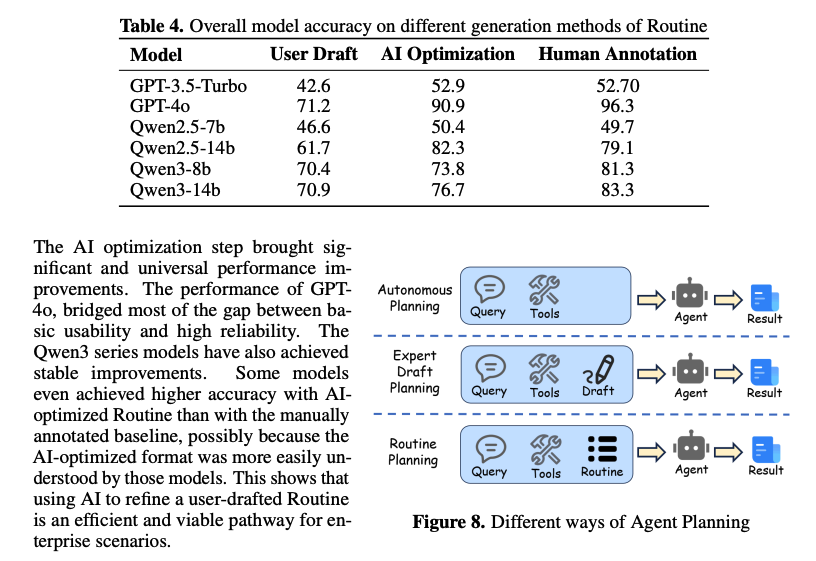

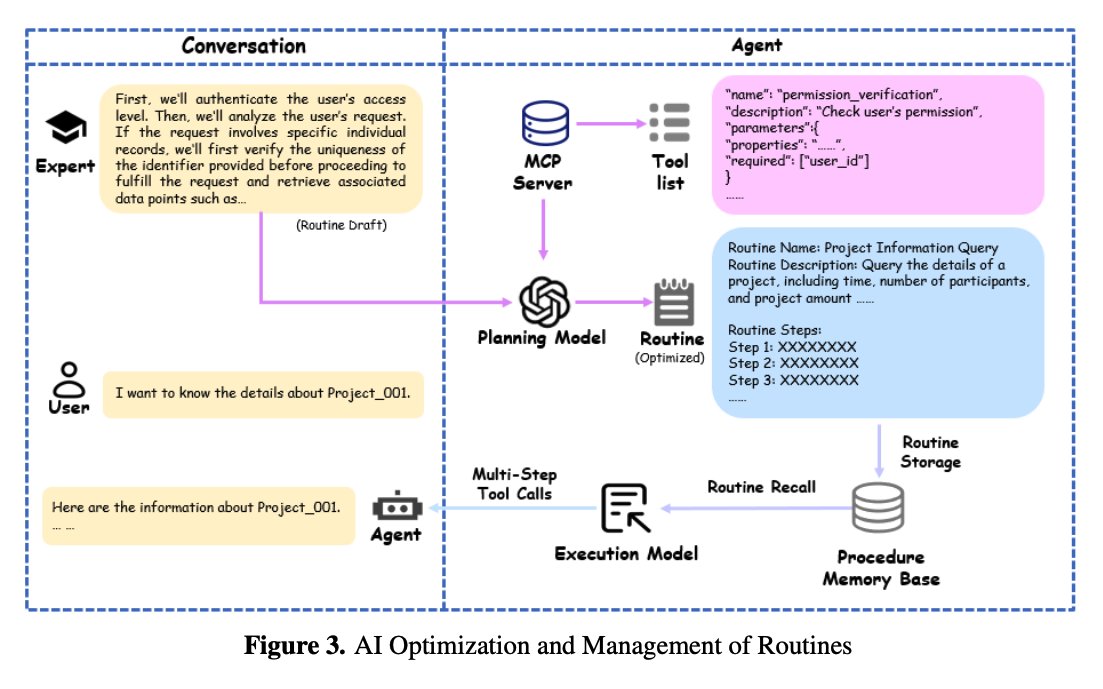
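The summary doesn’t spell out how these temporary variables work, so here’s a minimal Python sketch of the general idea, assuming a simple in-memory store on the executor side: a long tool output gets stashed under a short placeholder, the model passes only that placeholder in its next tool call, and the executor swaps the real value back in just before running the tool. `VariableStore`, `fetch_report`, and `word_count` are hypothetical names, not part of any published API.

```python
from typing import Any, Callable


class VariableStore:
    """Hypothetical executor-side store: long tool outputs live here, not in the prompt."""

    def __init__(self) -> None:
        self._values: dict[str, Any] = {}

    def stash(self, value: Any) -> str:
        """Save a value and return a short placeholder the model can reference."""
        name = f"$var{len(self._values) + 1}"
        self._values[name] = value
        return name

    def resolve(self, args: dict[str, Any]) -> dict[str, Any]:
        """Swap any argument that is a known placeholder for its stored value."""
        return {
            key: self._values[val] if isinstance(val, str) and val in self._values else val
            for key, val in args.items()
        }


# Hypothetical tools standing in for real APIs
def fetch_report() -> str:
    return "quarterly revenue table " * 500  # a long output: 1,500 words


def word_count(text: str) -> int:
    return len(text.split())


TOOLS: dict[str, Callable[..., Any]] = {"word_count": word_count}

store = VariableStore()
handle = store.stash(fetch_report())  # the model's context only ever sees "$var1"

# The next tool call the model emits refers to the placeholder, not the 1,500-word text
call = {"tool": "word_count", "args": {"text": handle}}
result = TOOLS[call["tool"]](**store.resolve(call["args"]))
print(handle, result)  # → $var1 1500
```

The payoff is that a 1,500-word payload costs the model only a handful of tokens to reference, no matter how many later steps reuse it.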

Get ready to supercharge your workflows! AI is totally optimizing processes, with Large Language Models (LLMs) now stepping up to help write them! ✍️ Using GPT-4o to optimize user-written workflows has shown accuracy rates almost on par with human-annotated levels. This signals that LLMs can definitely scale up workflow creation, but for top-tier performance, human review still reigns supreme. Teamwork makes the dream work!

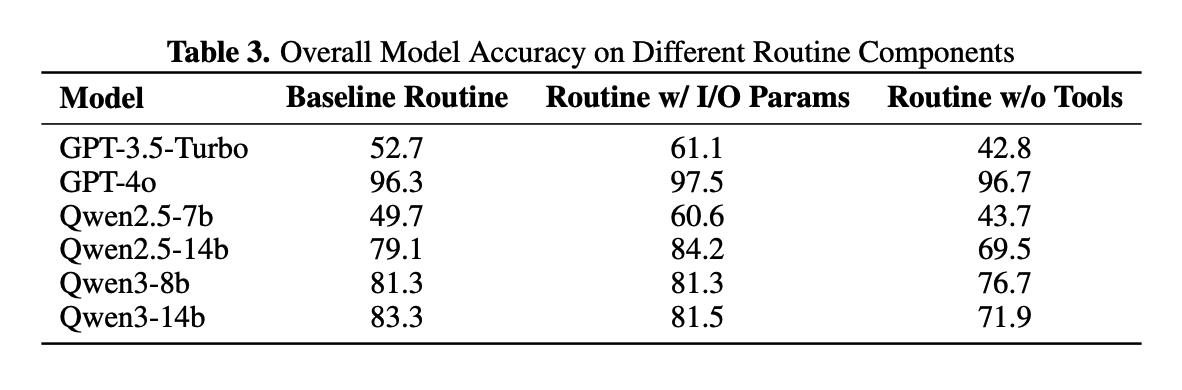

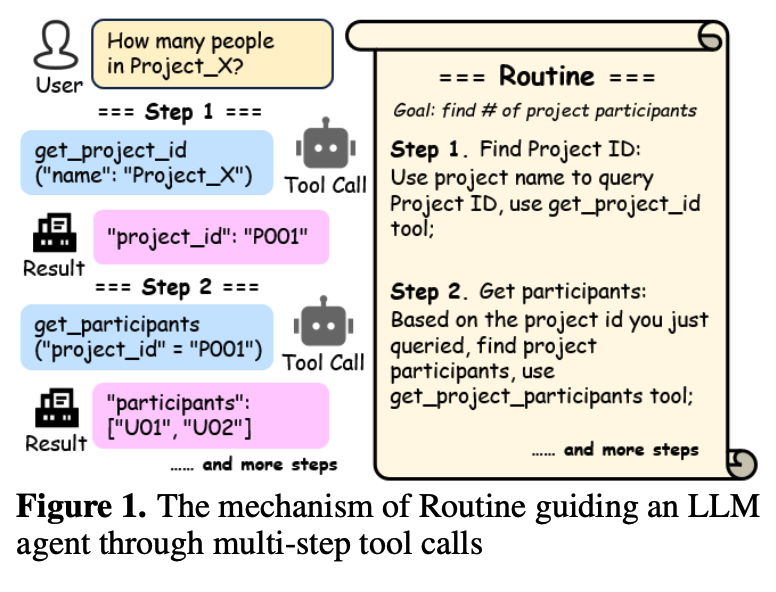

Listen up, workflow wizards! When it comes to workflow details, leaving out tool names and input/output descriptions is a HUGE no-no! 🙅‍♀️ The research shows that clearly including these in your process steps is crucial for success. Don’t skip the deets!

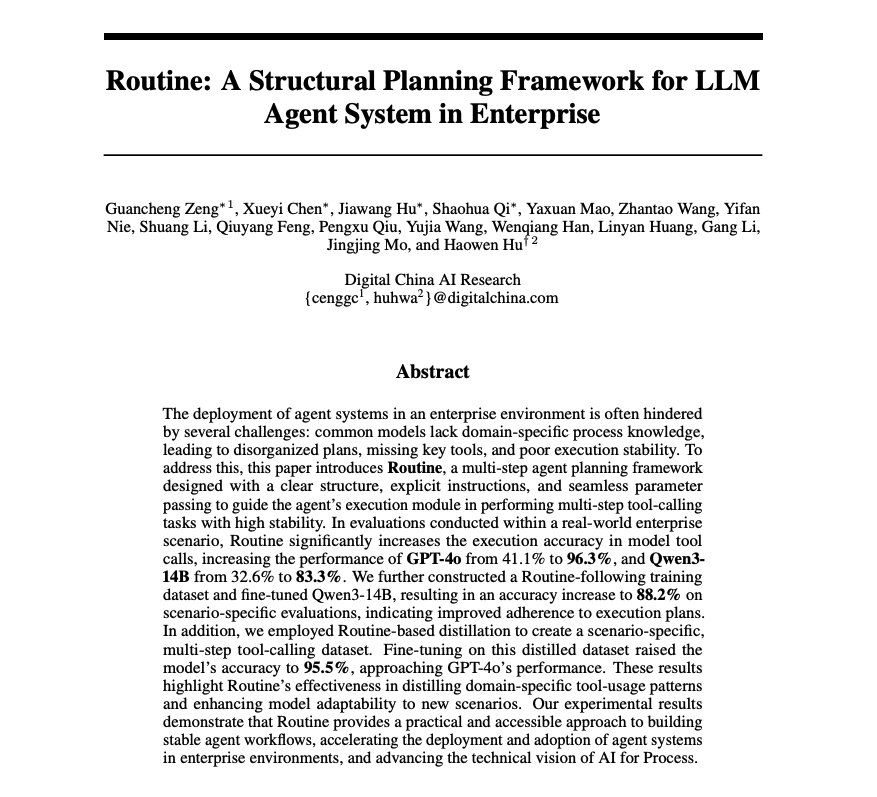
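To make “include tool names and input/output descriptions” concrete, here’s a hypothetical Python schema for a workflow step plus a tiny lint check that flags steps missing those details. The field names and the `get_order` tool are illustrative assumptions, not the format used in the research.

```python
from dataclasses import dataclass, field


@dataclass
class WorkflowStep:
    """Hypothetical schema for one step of a written workflow."""
    description: str
    tool: str = ""                                          # which tool to call
    inputs: dict[str, str] = field(default_factory=dict)   # parameter -> what to pass in
    outputs: dict[str, str] = field(default_factory=dict)  # result name -> what comes back


def missing_details(step: WorkflowStep) -> list[str]:
    """List the details a step leaves out, so vague steps can be caught before execution."""
    problems = []
    if not step.tool:
        problems.append("tool name")
    if not step.inputs:
        problems.append("input description")
    if not step.outputs:
        problems.append("output description")
    return problems


vague = WorkflowStep(description="Look up the customer's order")
explicit = WorkflowStep(
    description="Look up the customer's order",
    tool="get_order",
    inputs={"order_id": "order ID taken from the user's message"},
    outputs={"order": "order record with status and line items"},
)
print(missing_details(vague))     # → ['tool name', 'input description', 'output description']
print(missing_details(explicit))  # → []
```

This check is deliberately strict; a step that genuinely takes no input would need an explicit opt-out in a real system.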

Alright, let’s talk about a total game-changer: the Routine framework is blowing minds with its performance in Large Language Model planning and execution! 🤯 This clever framework separates planning, done by LLMs, from execution, handled by smaller instruction-tuned models, and it’s massively boosting accuracy. We’re talking GPT-4o’s accuracy jumping from 41.1% to a whopping 96.3%, and Qwen3-14B soaring from 32.6% to 83.3%! That’s insane!

So, what’s the lowdown on the Routine framework’s perks and quirks? ✨ This framework leverages mutable memory and modular tools, empowering even smaller models to reliably execute complex plans while keeping resource costs super low. Looking ahead, the next big thing is really digging into its limitations and figuring out how to crank up its efficiency and applicability even further. The future’s bright!
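The summary describes planning and execution only at a high level, so here’s a rough, hypothetical Python sketch of the division of labor (not the paper’s code): a plan is an explicit list of steps, each naming a tool, which memory slots feed its inputs, and where its output goes in a mutable memory. In the framework as described, a smaller instruction-tuned model follows the plan; here a plain Python loop stands in for that model so the sketch stays runnable. `run_routine`, `get_order`, and `format_reply` are made-up names.

```python
from typing import Any, Callable


# Hypothetical modular tools; real ones would wrap enterprise APIs
def get_order(order_id: str) -> dict[str, Any]:
    return {"id": order_id, "status": "shipped"}


def format_reply(order: dict[str, Any]) -> str:
    return f"Order {order['id']} is {order['status']}."


TOOLS: dict[str, Callable[..., Any]] = {"get_order": get_order, "format_reply": format_reply}


def run_routine(routine: list[dict[str, Any]], memory: dict[str, Any]) -> dict[str, Any]:
    """Follow a pre-written plan step by step, using `memory` as mutable shared state."""
    for step in routine:
        # Each input names the memory slot that holds the value to pass in
        args = {param: memory[slot] for param, slot in step["inputs"].items()}
        # Store the result so later steps can reference it by name
        memory[step["output"]] = TOOLS[step["tool"]](**args)
    return memory


# A plan of the kind a stronger planner model might write
routine = [
    {"tool": "get_order", "inputs": {"order_id": "user_order_id"}, "output": "order"},
    {"tool": "format_reply", "inputs": {"order": "order"}, "output": "reply"},
]
memory = run_routine(routine, {"user_order_id": "A-1001"})
print(memory["reply"])  # → Order A-1001 is shipped.
```

Because every step names its tool and its memory slots explicitly, the executor never has to guess what comes next, which hints at why a smaller model can follow such a plan reliably.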

Let’s get technical! The Routine framework is all about structured planning for Large Language Model (LLM) agents! 📊 It’s dropping a more reliable and accurate way to tackle complex tasks, aiming to seriously boost the stability and precision of LLM agents when they’re handling multi-step tool-calling tasks in enterprise settings. That’s a big deal!

Let’s take a deep dive: The importance of planning and control mechanisms, plus the challenges of AI self-management and regulation! 🧠 This highlights a major trend in the AI world: we totally need more effective planning and control mechanisms to make sure LLMs are reliable and controllable in complex scenarios. It also sparks some serious thought about boosting AI’s “self-management” capabilities and the tricky challenges of future AI regulation. Big questions ahead!