08-03-Daily AI Daily

Yuansi Insights Daily Digest 2025/8/3

Yuansi Daily Report

AI Content Summary

Breakthroughs in multi-agent AI systems are shaking things up, with 360, Ant Group, and Tencent all launching related tech that showcases collective intelligence and the massive potential for AGI development. Meanwhile, a cheating scandal involving AI at Columbia University has sparked major discussions about academic integrity and educational models, especially with the AI tool Cluely boldly claiming "everything can be cheated." GPT-5's development is hitting some serious snags, facing talent drain and tech bottlenecks, leading OpenAI to explore new avenues like reinforcement learning.

Today’s AI Buzz

✨The AI Swarm Era is Here! Multi-agent systems are making groundbreaking strides in artificial intelligence, signaling a whole new phase in AI development. We’re talking about a shift from single models to “swarm intelligence,” where multiple models team up and conquer tasks together. For example, 360 Group’s Nano AI has leveled up to an L4 “multi-agent swarm” system, empowering tens of thousands of AI agents to collaboratively tackle complex tasks with insane efficiency. Ant Group’s AWorld multi-agent framework absolutely crushed it in the IMO Math Competition, and guess what? They’ve open-sourced its code, showing off its “self-evolving” capabilities and giving us fresh vibes for AGI (Artificial General Intelligence) development. On another front, Tencent’s MixGRPO framework seriously boosted efficiency in image generation, proving that optimizing algorithms is just as crucial.

🔗 Project Repo 🔗 Project Repo

👀AI Cheating Scandal at Columbia & Cluely’s “Cheat All The Things” Vibe: Nearly all Columbia University students have reportedly been using AI to cheat, sparking a massive debate about academic integrity and educational models. Roy Lee, the founder of Cluely, boldly declared that “everything can be cheated.” His AI assistant, Cluely, boosts efficiency in all sorts of scenarios and can even help discreetly during interviews. This whole situation is pushing us to rethink what counts as cheating in the AI era and how traditional education systems can adapt to this AI revolution.

🚧GPT-5’s Bumpy Road to Development: GPT-5 development is hitting major roadblocks. Talent drain, technical bottlenecks (especially with reasoning models), and internal management chaos are all posing massive challenges to the project, giving it a “difficult birth.” GPT-5’s progress hasn’t met expectations, which means forecasts of how fast AI will advance need to get more realistic. OpenAI is also exploring new tech avenues, like reinforcement learning, to push forward.

💻Swift Composable Architecture: This is a fantastic library for building Swift applications. It truly nails composability, testability, and ergonomics, aiming to help developers whip up more consistent and understandable apps. 🔗 Project Repo

✨Devs, Rejoice! FiraCode Monospaced Font is Here! FiraCode is a free monospaced font that comes packed with programming ligatures, making your code look super neat and beautiful. 🔗 Project Repo

☁️Kubernetes Cluster Management Godsend: KubeSphere! KubeSphere is a container platform tailor-made for Kubernetes, letting you effortlessly manage multi-cloud, data center, and edge environments. 🔗 Project Repo

📚A Goldmine of JavaScript Algorithms & Data Structures: This project is a treasure trove, offering tons of JavaScript algorithm and data structure implementations, complete with detailed explanations and links for further reading. 🔗 Project Repo

💡Quick Hits on Three Cool Projects:

the-book-of-secret-knowledge is a cool project that gathers all sorts of checklists, manuals, cheatsheets, and more. VideoLingo is another awesome project that automatically handles video subtitle cutting, translation, alignment, and even voiceovers. And then there’s the ongoing buzz about GPT-5, which is also briefly touched upon.

🤯GPT-5: Is it a Donkey or a Horse? Let’s See It Run! News reports on GPT-5 are saying its performance bump isn’t that big. OpenAI is facing major tech challenges and talent drain, with GPT-5 showing some gains in programming and math, but not as much as folks hoped. Meta has been poaching a bunch of OpenAI’s talent, totally heating up the talent competition. OpenAI’s earlier Orion model didn’t hit the mark and ended up being released as GPT-4.5, which just goes to show that large model development is far from smooth sailing.

💰OpenAI: Rakes in $8.3 Billion in Funding, Valued at $300 Billion! OpenAI just bagged a whopping $8.3 billion in funding, pushing its valuation to a mind-blowing $300 billion! This totally shows the capital market’s huge confidence in AI tech, but it’s also got folks thinking: Is that high valuation actually justified?

🤔Tech Development Trends & Our Thoughts: Several projects and news items reflect the current AI tech development trends, pointing to automation, efficiency improvements, talent competition, and the delicate balance between technical bottlenecks and commercialization. Future AI tech development will definitely need continuous breakthroughs, more rational business models, and broader societal considerations.

✨Big Win! 19-Year-Old Dropouts Score $28 Million in Funding, Even OpenAI Invested! Conversion, a marketing automation company founded by two 19-year-old UC Berkeley dropouts, just snagged a sweet $28 million in Series A funding, with OpenAI even chipping in! This company is all about marketing automation, leveraging AI tech to boost efficiency for B2B businesses.

🚗Li Auto Unleashes VLA Advanced Driver-Assistance System: The Right Way to L3? Li Auto just dropped its VLA advanced driver-assistance system, which integrates a VLA (Vision-Language-Action large model). This system uses large models to empower cars to “think, communicate, remember, and self-improve.” Li Auto believes that VLA achieves a safer, smoother driving experience by bridging language intelligence with spatial intelligence and action commands. They’re also running simulation tests using world models.

🚀Westlake University Drops EPD-Solver: Boosts AI Image Generation Efficiency! Westlake University just unveiled EPD-Solver, a new diffusion model acceleration algorithm. This clever tech boosts image generation speed and quality by computing gradients along multiple directions in parallel and then fusing them with a weighted sum. 🔗 Project Repo
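To make the "parallel gradients plus weighted fusion" idea a bit more concrete, here is a minimal toy sketch in Python. It is not the EPD-Solver code: the function names, the intermediate points `taus`, and the `weights` are all hand-picked assumptions for illustration, and the toy ODE `dx/dt = -x` stands in for a real diffusion sampling ODE. The point is only that the gradient evaluations inside one step do not depend on each other, so they could run in parallel before being fused.

```python
import math

def euler_step(f, x, t, h):
    """Plain Euler step: one gradient evaluation per step."""
    return x + h * f(x, t)

def parallel_direction_step(f, x, t, h, taus, weights):
    """One step that fuses several gradients (illustrative assumption, not the paper's code).

    Each gradient is evaluated at its own intermediate point, reached with an
    Euler predictor from the current state. The evaluations are independent of
    one another, so they could be computed in parallel.
    """
    base = f(x, t)
    grads = [f(x + tau * h * base, t + tau * h) for tau in taus]
    fused = sum(w * g for w, g in zip(weights, grads))  # weighted fusion
    return x + h * fused

def integrate(step, f, x0, t0, t1, n_steps, **kwargs):
    """Apply `step` n_steps times from t0 to t1."""
    h = (t1 - t0) / n_steps
    x, t = x0, t0
    for _ in range(n_steps):
        x = step(f, x, t, h, **kwargs)
        t += h
    return x

if __name__ == "__main__":
    f = lambda x, t: -x            # toy ODE with exact solution exp(-t)
    exact = math.exp(-1.0)
    plain = integrate(euler_step, f, 1.0, 0.0, 1.0, 10)
    fused = integrate(parallel_direction_step, f, 1.0, 0.0, 1.0, 10,
                      taus=[1 / 3, 2 / 3], weights=[0.5, 0.5])
    print("Euler error:", abs(plain - exact))
    print("Fused error:", abs(fused - exact))
```

With the same number of steps, the fused step lands closer to the exact answer than plain Euler on this toy problem, which is the kind of quality-per-step gain the item describes.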

🎉Google’s New Gemini Deep Think Model is Live! Mathematicians Already Proving Conjectures with It! Google’s latest Gemini 2.5 Deep Think model is live, and get this: mathematicians are already using it to prove conjectures! This model can think like a mathematician, simultaneously considering multiple solutions and continuously optimizing the final answer using parallel thinking and reinforcement learning technologies. 🔗 Gemini App

🤯Deep Cogito Open-Source Models Outperform DeepSeek, Costing Less Than $3.5 Million! Deep Cogito has open-sourced four hybrid reasoning models, with their 671B parameter MoE model actually beating out DeepSeek v3 and DeepSeek R1. They pulled this off using Iterated Distillation and Amplification (IDA), keeping training costs under $3.5 million. 🔗 Huggingface

🚗Dongfeng eπ Unleashes “Wings of Future” Strategy, 2026 eπ008 Starts at ¥173,600! Dongfeng eπ (Epi) Technology has just dropped its “Wings of Future” strategy, boasting six major tech foundations and partnering with top-tier companies like Huawei to build high-end smart products. The 2026 Dongfeng eπ008 is officially launched, with a limited-time early bird price starting from 173,600 yuan.

Overall, AI models have made significant strides in reasoning capabilities and efficiency. The automotive industry, meanwhile, is actively exploring intelligence and new energy. These advancements totally show off the massive potential of technological progress, but they’re also bringing new challenges and food for thought.

✨The 2025 Tencent Algorithm Competition is Heating Up! Over 8,400 AI pros globally are teaming up to tackle full-modality generative recommendation! The competition has drawn over 2,800 teams from nearly 30 countries, including over 340 top Chinese universities and 140 renowned international universities. Tencent is also hooking them up with its Angel Machine Learning Platform for tech support.

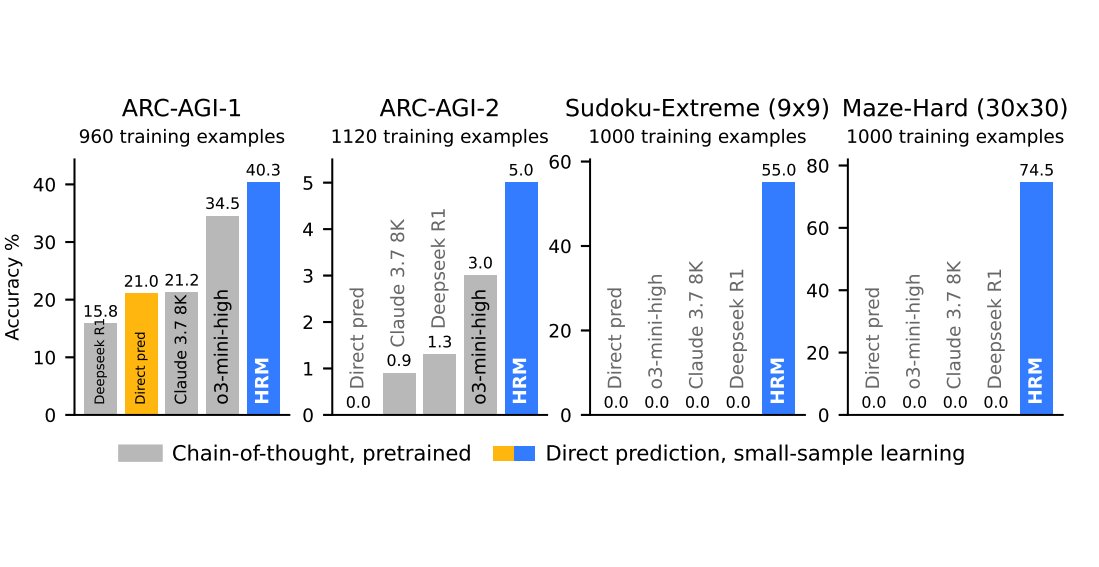

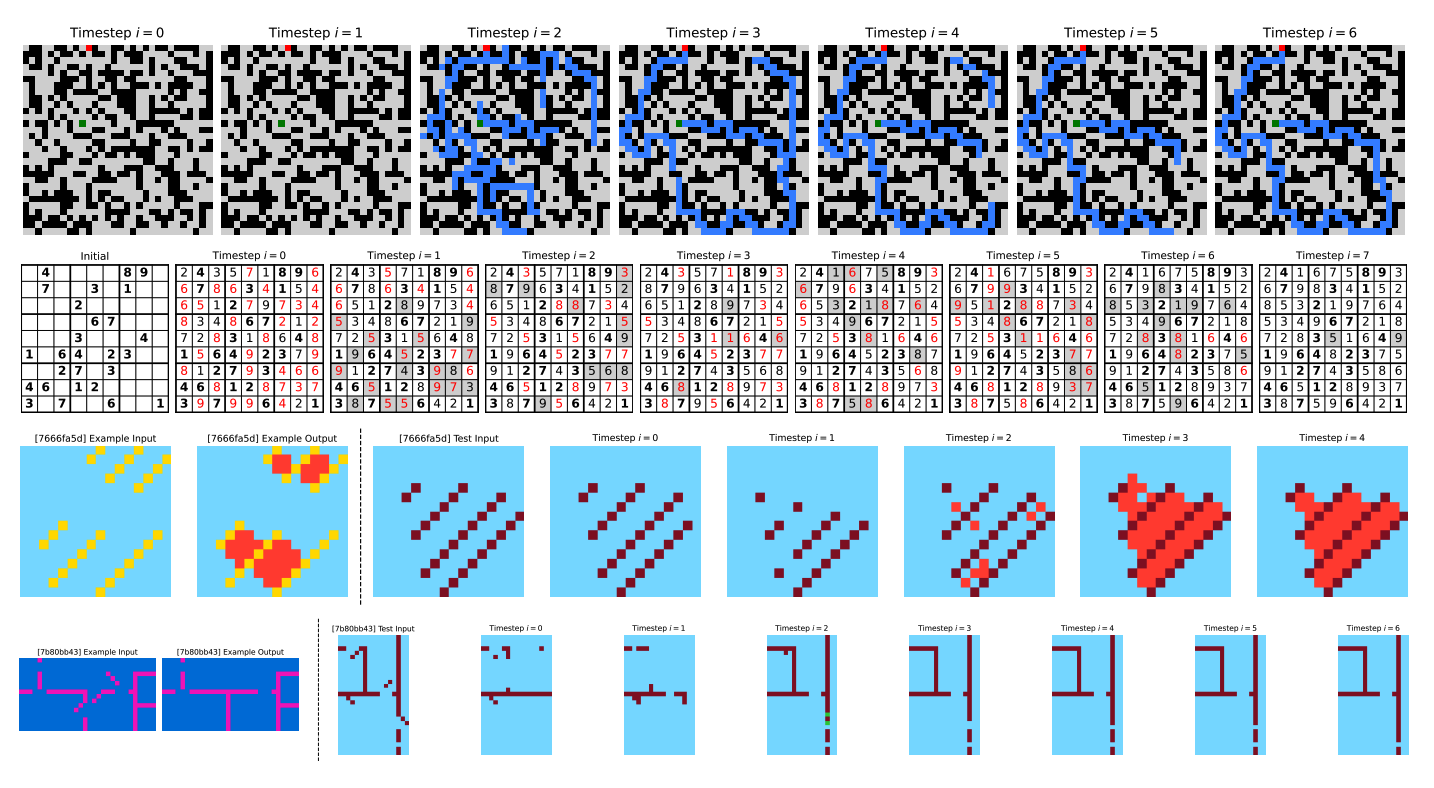

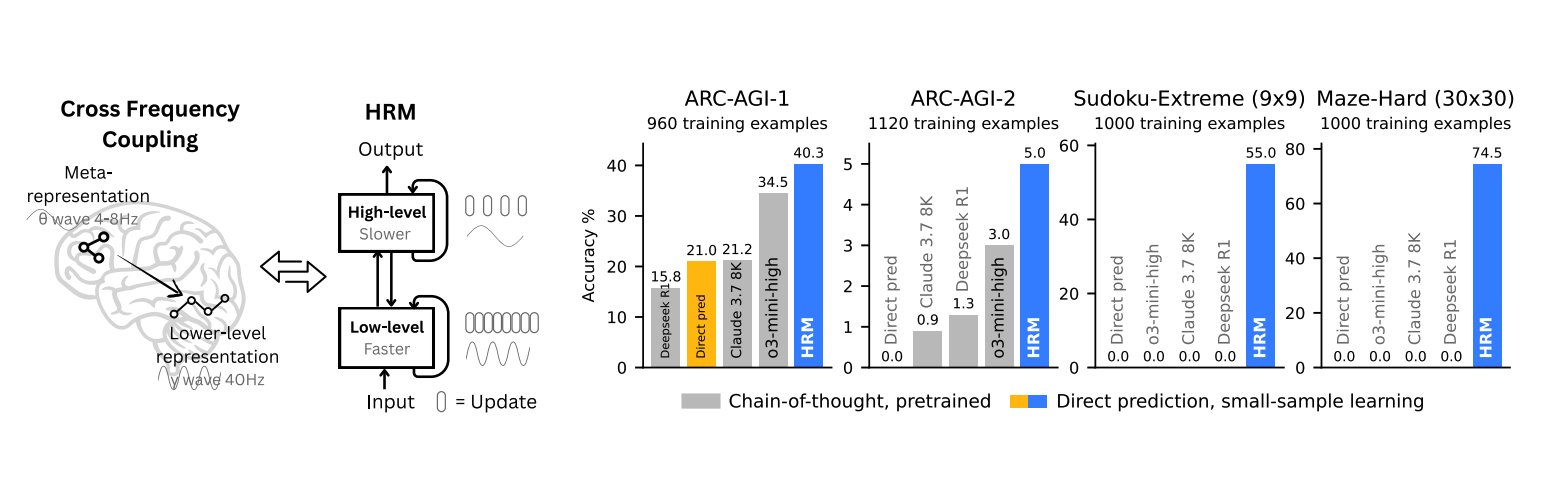

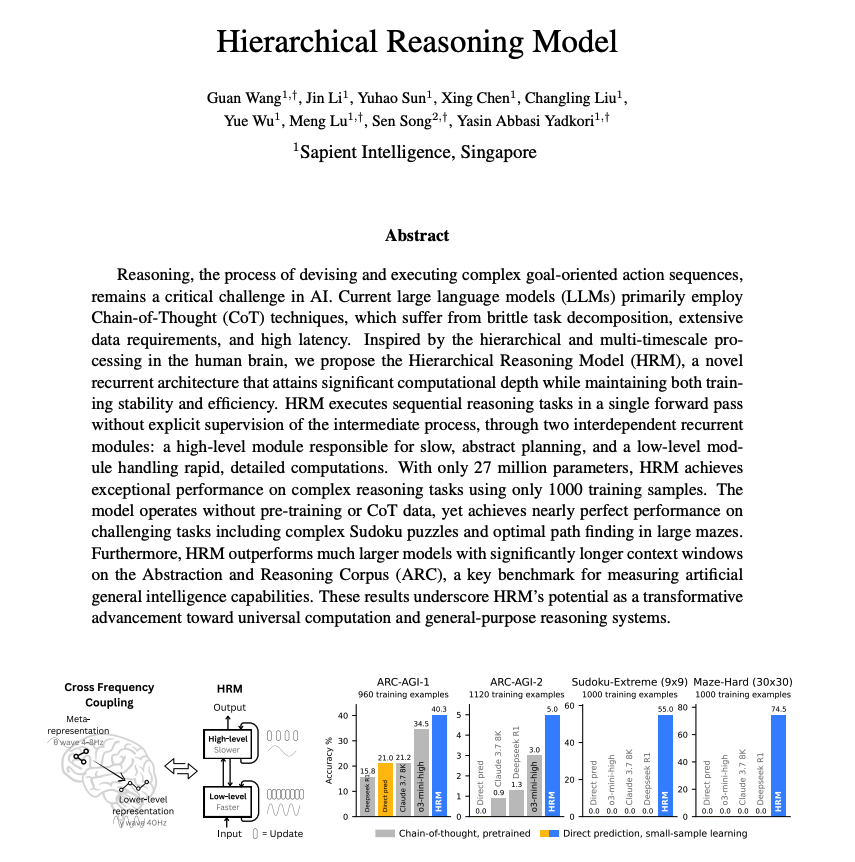

🚀New AI Model Breakthrough: HRM Algorithm Steals the Spotlight! An algorithm named HRM is pulling off astonishing feats in solving complex problems, crushing other large models in tests like ARC-AGI, extreme Sudoku, and maze puzzles. Research has revealed that the dimensionality hierarchy HRM learns across its modules resembles the one seen in the brain’s cortex.

🔗 Paper Link

🔥AI Development: Opportunities and Challenges, All at Once! The breakthroughs from the Tencent Algorithm Competition and the HRM algorithm showcase the booming growth in the Artificial Intelligence field. However, it’s also crucial to consider the changes and potential risks brought by full-modality generative recommendation and the HRM algorithm.

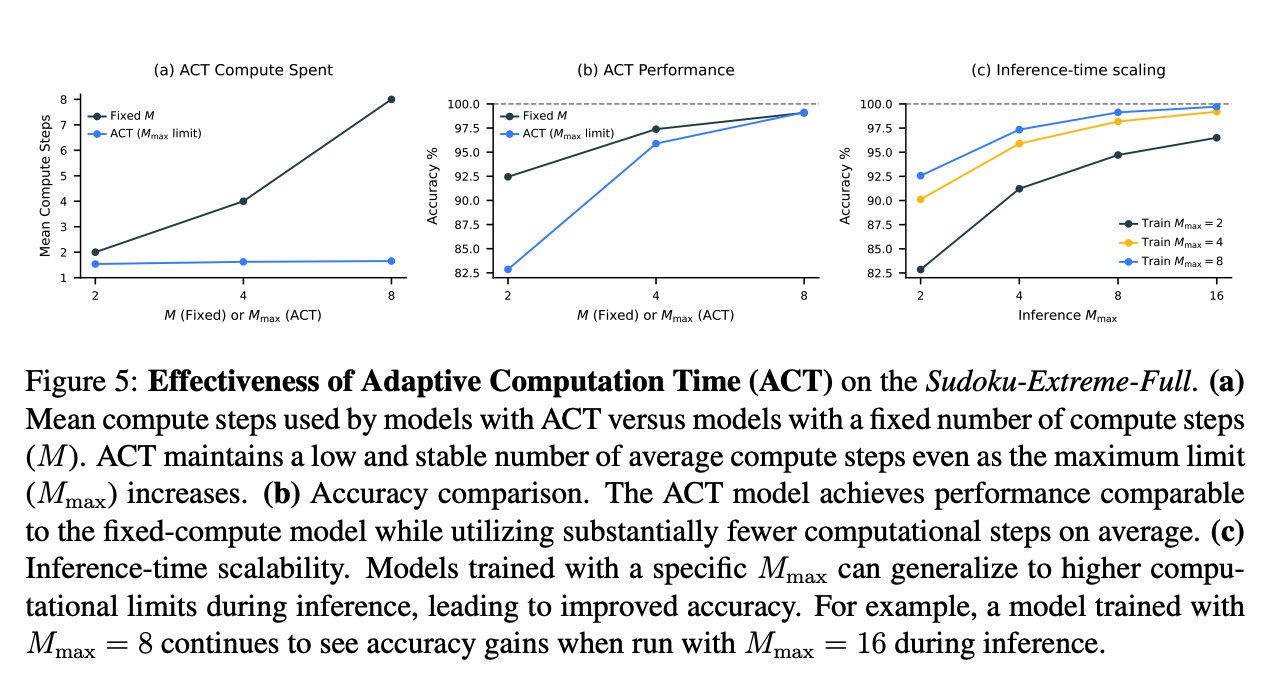

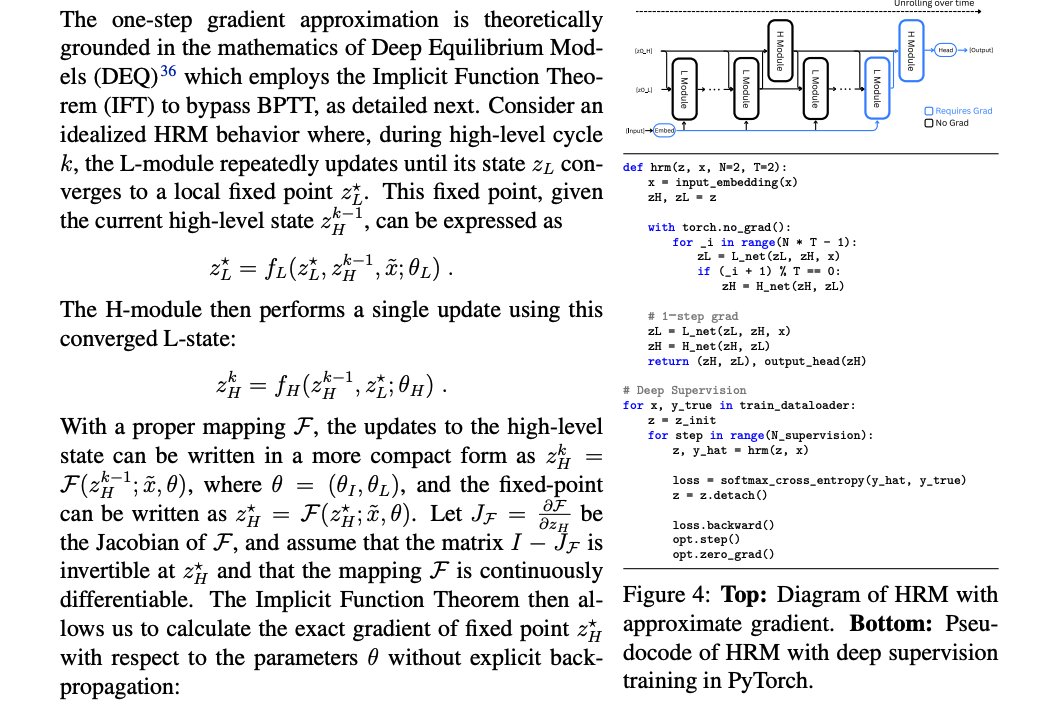

🧠More on HRM: A Smarter, More Efficient AI Model: HRM uses a Q-learning based halting mechanism to get adaptive computation time, so it can spend more steps on harder problems. It also introduces a hierarchical convergence mechanism and employs a single-step gradient approximation to dodge memory-intensive backpropagation through time (BPTT).
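Here is a rough Python sketch of what a halt-or-continue loop for adaptive computation time can look like. Everything in it is an assumption for illustration: in HRM the Q-values would come from a learned head trained with Q-learning, whereas here `q_head` is a hand-written stand-in, and a Newton step toward a square root plays the role of one "reasoning segment." No training is shown.

```python
def refine(state, target):
    """One 'reasoning segment': a Newton step toward sqrt(target) (toy stand-in)."""
    return 0.5 * (state + target / state)

def q_head(state, target, step_cost=1e-3):
    """Hand-written stand-in for a learned Q-head (hypothetical, not HRM's).

    q_halt is high when the current answer is already accurate;
    q_continue is a small fixed penalty for spending more compute.
    """
    residual = abs(state * state - target)
    q_halt = -residual
    q_continue = -step_cost
    return q_halt, q_continue

def solve_with_adaptive_halting(target, max_segments=16):
    """Run segments until halting looks better than continuing, or the budget runs out."""
    state = target if target >= 1.0 else 1.0
    for segment in range(1, max_segments + 1):
        state = refine(state, target)
        q_halt, q_continue = q_head(state, target)
        if q_halt > q_continue:
            break
    return state, segment

if __name__ == "__main__":
    for target in (4.0, 10000.0):
        answer, used = solve_with_adaptive_halting(target)
        print(f"target={target}: answer={answer:.6f}, segments used={used}")
```

The harder input uses more segments than the easy one, which is the whole appeal of adaptive computation time: compute scales with difficulty instead of being fixed up front.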

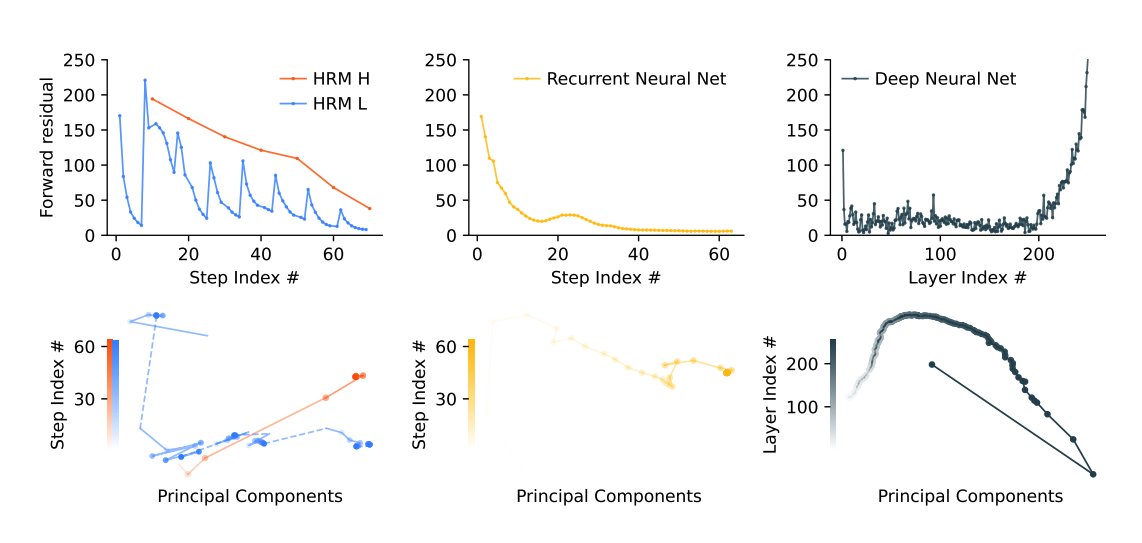

🤔Small Models Solving Complex Problems? Yep! The HRM model, with only 27 million parameters and about 1,000 training examples, can solve complex tasks that even large language models based on Chain-of-Thought (CoT) can’t crack. Its secret sauce? Its unique brain-like architecture, which uses two mutually cooperative recurrent neural networks.
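As a loose illustration of two cooperating recurrent modules, the Python sketch below runs a fast low-level update several times per slow high-level update. The scalar states, the fixed coefficients, and the names `low_step`, `high_step`, and `hierarchical_forward` are all made up for this example; the real model uses learned networks over vector states.

```python
import math

def low_step(z_low, z_high, x):
    """Fast module: updates every step, conditioned on the slow state and the input."""
    return math.tanh(0.5 * z_low + 0.8 * z_high + x)

def high_step(z_high, z_low):
    """Slow module: updates once per cycle, reading the fast module's result."""
    return math.tanh(0.9 * z_high + 0.6 * z_low)

def hierarchical_forward(x, n_cycles=3, t_low=4):
    """Run n_cycles slow updates, each preceded by t_low fast updates."""
    z_low, z_high = 0.0, 0.0
    trace = []
    for _ in range(n_cycles):
        for _ in range(t_low):
            z_low = low_step(z_low, z_high, x)   # fast, detailed computation
        z_high = high_step(z_high, z_low)        # slow, abstract update
        trace.append(z_high)
    return z_high, trace

if __name__ == "__main__":
    final, trace = hierarchical_forward(0.3)
    print("high-level state after each cycle:", trace)
```

The design choice worth noticing is the two timescales: the fast module gets several steps to settle under a fixed high-level context before the slow module moves on.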

🔮Future Outlook: HRM is challenging our traditional understanding of large models. The future might just see more AI models like HRM—lightweight, efficient, and low-power.

🤯Hierarchical Reasoning Model: A netizen shared their thoughts on the Hierarchical Reasoning Model, noting it achieves impressive hierarchical reasoning using a recurrent architecture.

⚡AI Industry Hot Takes! Anthropic has revoked OpenAI’s access to the Claude API. Interesting research suggests that intentionally making large language models (LLMs) “evil” during training might actually make them friendlier in the long run. Plus, Meta is pouring a ton of investment into AI data labeling. 🔗 Wired Report, 🔗 Technology Review Report, 🔗 IEEE Spectrum Report